An AI system’s ability to recall earlier user interactions is a foundational component of its effectiveness, especially in educational applications. Persistent memory lets these systems give responses that are not only accurate but also contextually appropriate, creating a more personalized learning environment. This article examines how AI models manage and use memory so that each student interaction can inform subsequent responses. The focus is on the memory mechanisms that enable continuous learning and adaptation within AI systems.

Architectural Overview of AI Memory Systems

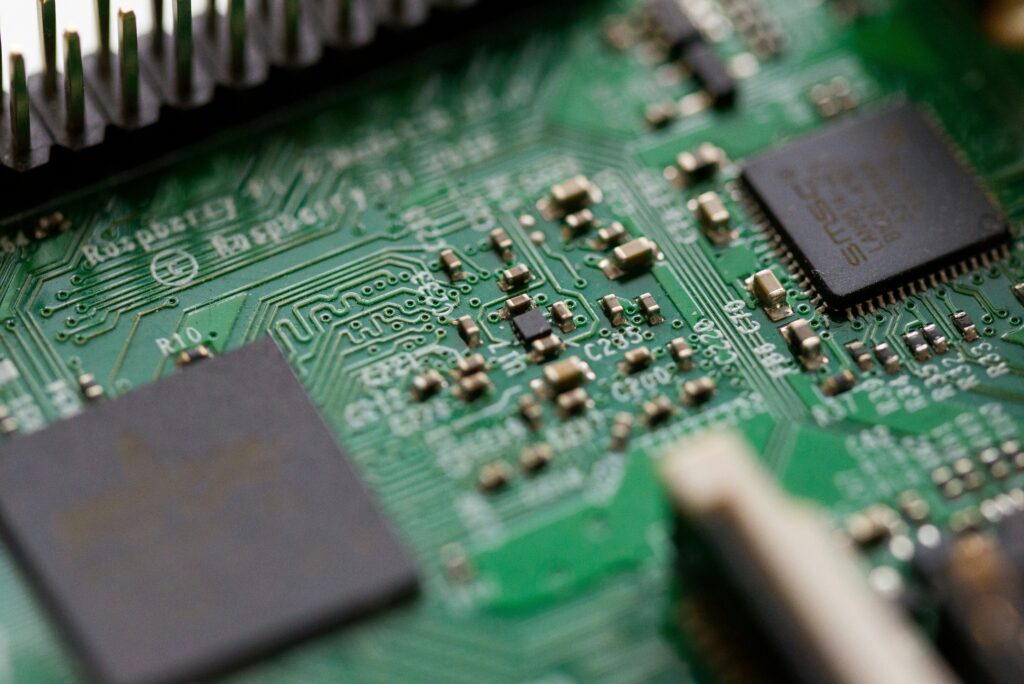

The architecture of an AI memory system determines how it processes and retains information. At the heart of this capability are neural network designs such as Long Short-Term Memory (LSTM) networks and Transformers, which are well suited to sequence prediction and to maintaining context over long interactions. LSTMs use learned gates for the selective remembering and forgetting of information, while Transformers use attention to weigh which parts of the available context matter for the current output. Both mechanisms help keep the information the model relies on relevant and accurate.
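To make the idea of selective remembering and forgetting concrete, here is a minimal sketch of one step of a scalar LSTM cell in plain Python. The function name `lstm_step` and the weight layout are assumptions made for illustration; production systems use vectorized implementations from a deep learning library, with weights learned during training rather than set by hand.

```python
import math


def sigmoid(x):
    """Squash a value into (0, 1); used for the gates."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, weights):
    """Run one step of a scalar LSTM cell (illustrative, not optimized).

    `weights` maps a gate name to a tuple (w_x, w_h, bias).
    Returns the new hidden state and the new cell state.
    """
    def gate(name, squash):
        w_x, w_h, bias = weights[name]
        return squash(w_x * x + w_h * h_prev + bias)

    forget = gate("forget", sigmoid)         # how much old memory to keep
    write = gate("input", sigmoid)           # how much new information to store
    candidate = gate("candidate", math.tanh) # the new information itself
    output = gate("output", sigmoid)         # how much memory to expose

    c_new = forget * c_prev + write * candidate
    h_new = output * math.tanh(c_new)
    return h_new, c_new
```

With a strongly positive forget-gate bias and a strongly negative input-gate bias, the cell carries its previous state forward almost unchanged; reversing the two biases makes it overwrite the old state with the new candidate.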

Moreover, the integration of these architectures into educational platforms must be handled carefully to ensure they function as intended. This is evident in AI-driven writing-support tools, which use these memory architectures to adapt to students’ evolving needs and preferences, learning from each interaction to offer more tailored guidance in subsequent sessions.

Mechanisms of Data Retention

AI models employ various methods to retain and recall data effectively. This retention is crucial for ensuring continuity in interactions, particularly in an educational context where the progression of learning must be seamless. Two primary techniques dominate the landscape: parameter updating and the establishment of long-term memory.

Parameter updating involves adjusting the weights within the AI’s neural network based on new information received and feedback from its outputs. This method ensures that the model gradually improves and adapts to the student’s learning style and needs. For example, if a student consistently struggles with a specific type of problem, the AI can learn to anticipate these difficulties and adjust its instructional approach accordingly.
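As a rough illustration of parameter updating, the sketch below applies one stochastic gradient descent step to a tiny linear model that predicts how difficult a student will find a problem. The function name `sgd_update`, the feature encoding, and the learning rate are all assumptions chosen for the example; real tutoring models have far more parameters and are usually updated in scheduled training runs rather than after every answer.

```python
def predict(weights, features):
    """Linear prediction: weighted sum of the input features."""
    return sum(w * x for w, x in zip(weights, features))


def sgd_update(weights, features, target, learning_rate=0.1):
    """Return new weights after one gradient step on squared error.

    For the loss 0.5 * (prediction - target) ** 2, the gradient with
    respect to each weight is (prediction - target) * feature.
    """
    error = predict(weights, features) - target
    return [w - learning_rate * error * x for w, x in zip(weights, features)]


# Hypothetical usage: features might encode problem type and length,
# and the target is the difficulty the student actually experienced.
weights = [0.0, 0.0]
for _ in range(50):
    weights = sgd_update(weights, features=[1.0, 2.0], target=1.0)
```

Each step moves the prediction closer to the observed feedback, which is the sense in which the model gradually adapts to the student.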

Long-term memory techniques, on the other hand, are more about storing information that is not immediately necessary but might be relevant in the future. Techniques such as embedding vectors and external memory modules help AI systems store and retrieve vast amounts of data without compromising the performance of the neural network. This kind of memory is vital for AI tutors that need to remember previous academic performances to tailor their teaching strategies effectively.
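One common way to combine embedding vectors with an external memory is to store each note alongside a vector and retrieve the notes whose vectors are most similar to a query. The sketch below assumes a hypothetical `MemoryStore` class and uses hand-written three-dimensional vectors; in practice the vectors would come from an embedding model and the store would typically be a dedicated vector database.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class MemoryStore:
    """A toy external memory kept outside the neural network's weights."""
    entries: list = field(default_factory=list)

    def add(self, vector, note):
        self.entries.append((vector, note))

    def recall(self, query, k=1):
        """Return the k notes whose vectors are most similar to the query."""
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(query, entry[0]),
            reverse=True,
        )
        return [note for _, note in ranked[:k]]


store = MemoryStore()
store.add([1.0, 0.0, 0.0], "struggled with adding fractions")
store.add([0.0, 1.0, 0.0], "prefers worked examples for essay structure")
related = store.recall([0.9, 0.1, 0.0], k=1)
```

Because the notes live outside the network, they can be added, corrected, or deleted without retraining the model.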

Privacy and Ethical Considerations

As AI systems become more integrated into educational environments, the issue of privacy and ethical use of memory becomes increasingly significant. Remembering student interactions raises substantial concerns about data security and the potential for misuse of sensitive information.

To address these concerns, developers and educators must implement stringent data protection measures and comply with applicable privacy laws, such as FERPA (Family Educational Rights and Privacy Act), which governs student education records in the United States, and the GDPR (General Data Protection Regulation), which governs personal data generally in the European Union. These regulations constrain how student information can be collected, stored, and used, so that data retention does not infringe on individual privacy.

Furthermore, the ethical handling of this data is paramount. AI systems must be designed to forget certain types of information, such as inadvertent personal disclosures that are not relevant to the educational content. This selective forgetting is crucial not only for ethical reasons but also for maintaining the trust of users, ensuring that the AI system is seen as a safe and beneficial tool for education rather than a surveillance mechanism.
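One simple form of selective forgetting is to redact obvious personal identifiers before a message is ever written to memory. The sketch below is an assumption-laden illustration: the function names and the two regular expressions are hypothetical, they cover only email addresses and one phone number format, and they are nowhere near a complete personal-data detector.

```python
import re

# Deliberately narrow, illustrative patterns; real systems need much more.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text):
    """Replace matched identifiers with placeholders before storage."""
    text = EMAIL_PATTERN.sub("[email removed]", text)
    return PHONE_PATTERN.sub("[phone removed]", text)


def remember(memory, message):
    """Append only the redacted form of a message to the memory list."""
    memory.append(redact(message))
    return memory


memory = remember([], "Reach me at sam@example.org or 555-123-4567 about fractions.")
```

Redacting at write time means the raw disclosure never reaches long-term storage, which is easier to reason about than trying to delete it later.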

Improving Accuracy and Relevance

One of the key benefits of memory in AI models is the significant enhancement of accuracy and relevance in the responses they provide. This improvement is chiefly due to the model’s ability to retain context over ongoing interactions, which allows for a deeper understanding of the user’s needs and learning progress. For example, an AI tutor can remember a student’s past difficulties with a subject and preemptively offer targeted advice or resources in future sessions.

The impact of this capability has been reported in case studies of AI systems deployed in educational settings. For instance, AI tutors that remember student preferences and learning history are reported to increase engagement and improve learning outcomes by delivering personalized content that students find easier to understand and relate to. Additionally, these systems can track how well particular teaching approaches work for different topics and adapt their instruction for each student accordingly.

Challenges in Memory Management

Despite the advantages, managing memory in AI systems is fraught with challenges. Key issues include memory decay, where the relevance of stored information diminishes over time, and data corruption, which can lead to inaccurate or inappropriate responses. AI systems must also detect and handle outdated or incorrect information that could mislead or confuse users.

To address these issues, developers employ various strategies. Regular updates and retraining cycles can refresh an AI’s memory and align it with the latest information and educational standards. Additionally, mechanisms to verify the accuracy and relevance of retained data help maintain the integrity of the system’s outputs. These approaches ensure that AI remains a reliable tool for education, capable of adapting to both the evolving curriculum and the individual learner’s needs.
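One way to keep stale information from dominating is to discount each stored item by its age and drop items that fall below a threshold. The sketch below assumes an exponential half-life model, a 30-day half-life, and a simple dictionary layout for memories; all of these are illustrative choices, not a description of any particular system.

```python
def decayed_relevance(base_score, age_days, half_life_days=30.0):
    """Halve the relevance score for every `half_life_days` of age."""
    return base_score * 0.5 ** (age_days / half_life_days)


def prune(memories, today, threshold=0.1):
    """Keep only memories whose decayed relevance is still at or above threshold.

    Each memory is a dict with keys "note", "score", and "day" (day stored).
    """
    return [
        m for m in memories
        if decayed_relevance(m["score"], today - m["day"]) >= threshold
    ]


memories = [
    {"note": "confused by long division", "score": 1.0, "day": 0},
    {"note": "mastered long division", "score": 1.0, "day": 90},
]
current = prune(memories, today=120)
```

In this example the older note has aged 120 days and falls below the threshold, while the newer note, aged 30 days, is retained. A fuller system would also re-verify retained items against recent student work rather than rely on age alone.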

Future Trends in AI Memory

Looking ahead, the future of AI memory in educational contexts promises even greater integration and sophistication. Advances in neural network architectures and learning algorithms are expected to enhance the efficiency and capacity of AI memory systems. For example, developments in federated learning could enable AI models to learn from a vast array of decentralized data sources while respecting privacy concerns, potentially revolutionizing how personalized learning experiences are delivered.
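The aggregation step at the center of federated learning can be sketched in a few lines. The example below shows federated averaging, in which a server combines model weights trained locally on each client, weighting each client by how many examples it trained on. The function name `federated_average` and the flat-list weight format are assumptions; the sketch omits local training, communication, and privacy protections such as secure aggregation.

```python
def federated_average(client_weights, client_sizes):
    """Combine client models into one, weighted by local dataset size.

    `client_weights` is a list of equal-length weight lists, one per client.
    `client_sizes` is the number of training examples each client used.
    Only weights leave the client; the raw student data stays local.
    """
    total = sum(client_sizes)
    dimension = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dimension)
    ]


# Hypothetical usage: two schools train locally and share only weights.
global_weights = federated_average(
    client_weights=[[1.0, 2.0], [3.0, 4.0]],
    client_sizes=[1, 3],
)
```

Here the second client contributes three times as much to the average because it trained on three times as many examples.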

Conclusion

The journey of AI memory in education is one of continuous improvement and adaptation. As AI systems become more capable of remembering and using the wealth of data generated through student interactions, their role in education is likely to expand. These advancements can enhance the accuracy and relevance of AI responses, provided that key challenges such as privacy and data integrity are addressed alongside them.